Voice Authentication Accuracy (13 Aug 2021)

A Bit of Background

I get asked a lot of recurring questions when I try to explain how Voice Authentication works. One question that deserves special treatment is how the system decides whether the caller's voice passes the test. Furthermore, if the caller does not pass the test, what does that actually mean? And what is the Accuracy of Omni Authentication (or any other Voice Biometrics solution, for that matter)?

It is a tricky one that takes quite a bit of effort to explain, so I've decided to dedicate a post to the inner mechanics of Voice Biometrics.

In this post we discuss the following Key Points:

- The mechanics of Voice Authentication

- Confidence Score

- Authentication Threshold

- What Voice Authentication Accuracy is

Voiceprinting

The Voiceprint is the fundamental component of any Voice Authentication solution. The idea behind Voiceprints is simple: they are the voice equivalent of fingerprints. This, of course, assumes that our voices are at least as unique as our fingerprints. While there is no 100% reliable proof that this is the case (at least none that I am aware of), there is plenty of research on the topic, conducted on fairly large collections of voice samples produced by large numbers of people (tens of thousands). The goal of such research is to establish whether it is possible to differentiate between speakers by sampling their voices. It turns out that it is possible, with a degree of ... well, accuracy. But before I talk about accuracy, let's consider what "voice sampling" means.

Say we have two different 7-second recordings of a person's speech. How would we go about comparing them to establish whether they come from the same individual? There are quite a few approaches to this task, many of them rather involved mathematically. Having said that, they can be split into two broad categories:

- Text-Dependent Methods - these rely on the two voice recordings containing the same phrase (in the same language)

- Text-Independent Methods (used in Omni Authentication) - the recordings being compared do not have to contain the same phrase or even be in the same language

Putting maths aside, in a commercial sense the Text-Independent approach is far superior to its counterpart. Indeed, when implementing a Voice Authentication system in a real-life Contact Center, we have to face its day-to-day imperfections: customers might be distracted, and what they say may not be the exact phrase that the system expects. The two phrases "how are you?" and "how's things?" are completely different even though they carry the same meaning - you get the idea.

That is where Voiceprints come in handy. A Voiceprint is a set of numbers that describe the characteristics of the voice itself. There's plenty to describe in a human voice, so Voiceprints tend to be long sequences of numbers, but the intuition behind them is that these numbers capture things such as speech inflections (as opposed to the speech content itself) and acoustics (e.g. timbre) specific to an individual (hence biometrics) when they talk.

Voiceprints are generated by analysing a sample (or multiple samples) of human speech. They must satisfy two conditions. On the one hand, Voiceprints generated from speech samples of the same speaker must be the same, or at least very close to each other, when compared. On the other hand, Voiceprints that come from different speakers must be further apart from each other.
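To make the "close together" and "further apart" conditions concrete, here is a minimal Python sketch. It assumes that a Voiceprint is a plain vector of floats and that closeness is measured with cosine similarity, a common choice for comparing such vectors; the post does not say which measure Omni Authentication uses, and the 4-number voiceprints below are made up (real ones are much longer).

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two voiceprint vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("voiceprints must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy voiceprints, invented for illustration.
alice_en = [0.9, 0.1, 0.3, 0.2]
alice_fr = [0.8, 0.2, 0.35, 0.15]  # same speaker, different language
bob_en = [0.1, 0.9, 0.2, 0.7]      # a different speaker

same = cosine_similarity(alice_en, alice_fr)
different = cosine_similarity(alice_en, bob_en)
print(f"same speaker:      {same:.3f}")
print(f"different speaker: {different:.3f}")
```

With these toy numbers, the two recordings of the same (hypothetical) speaker score close to 1, while the different speaker scores much lower.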

To illustrate, I have collected a number of voice samples from 13 people (some of whom speak in multiple languages, with various levels of background noise and audio distortion) and used Omni Authentication to generate Voiceprints from those samples. There are 3 to 14 samples per person.

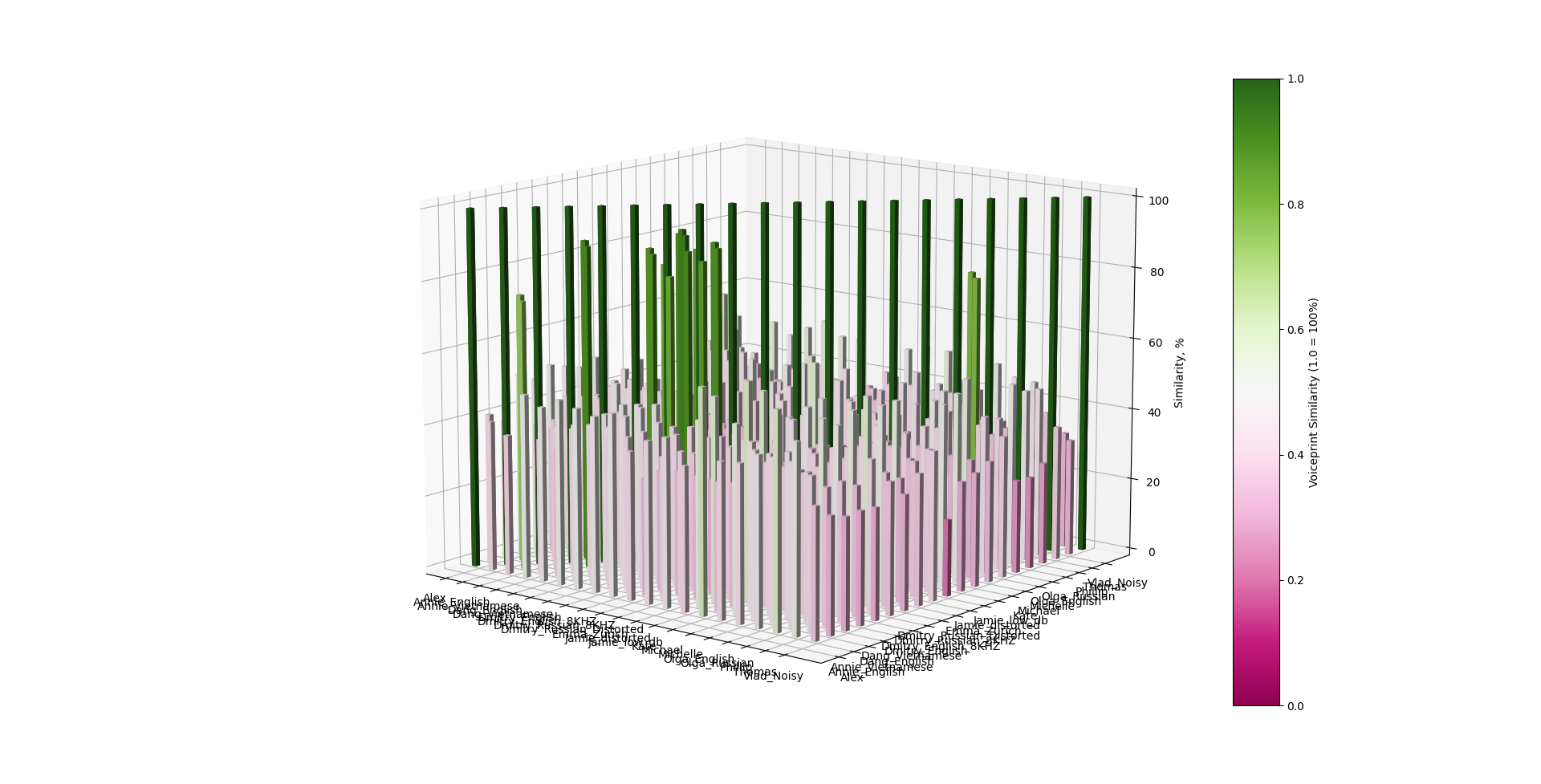

Figure 1 shows how the system clusters Voiceprints from the same speaker together:

Figure 1

As we can see, the main factor that determines how close Voiceprints are to one another is the speaker. Even when the speaker uses different languages or the audio quality differs significantly, the system still tends to group that speaker's Voiceprints close together.

Confidence Score & Authentication Threshold

Take-away:

- The recommended "all-rounder" Authentication Threshold is 70%

Great, so now that we know what a Voiceprint is and how it is used to distinguish between the speakers, let's talk about the Confidence Score and how we decide if the voiceprint belongs to a particular person.

Simply put, the Confidence Score reflects the distance between Voiceprints in the sense shown in Figure 1 (the distance between the dots that represent Voiceprints). The smaller this distance, the higher the Confidence; if it is small enough, we conclude that the voice matches the speaker.

This is where the Authentication Threshold comes in. As you can see in Figure 1, Voiceprints that belong to the same speaker may still be spaced apart by some Marginal Value, because they were generated from different voice samples (potentially in different languages!). This Marginal Value is the threshold: the largest distance (or, equivalently, the lowest Confidence Score) at which we still accept a match.
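The decision itself boils down to a comparison against the threshold. The sketch below assumes, purely for illustration, that the Confidence Score is a similarity in the range [-1, 1] rescaled to a percentage; `confidence_score` and `is_authenticated` are hypothetical names, not part of any Omni Authentication API. The default of 70% mirrors the recommended all-rounder threshold.

```python
def confidence_score(similarity: float) -> float:
    """Hypothetical mapping from a similarity in [-1, 1] to a 0-100% score."""
    # Negative similarity is treated as "no resemblance at all".
    return max(0.0, min(1.0, similarity)) * 100.0


def is_authenticated(similarity: float, threshold_pct: float = 70.0) -> bool:
    """Accept the caller when the Confidence Score reaches the Authentication Threshold."""
    return confidence_score(similarity) >= threshold_pct


print(is_authenticated(0.988))                     # True: 98.8% >= 70%
print(is_authenticated(0.336))                     # False: 33.6% < 70%
print(is_authenticated(0.75, threshold_pct=80.0))  # False under a stricter threshold
```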

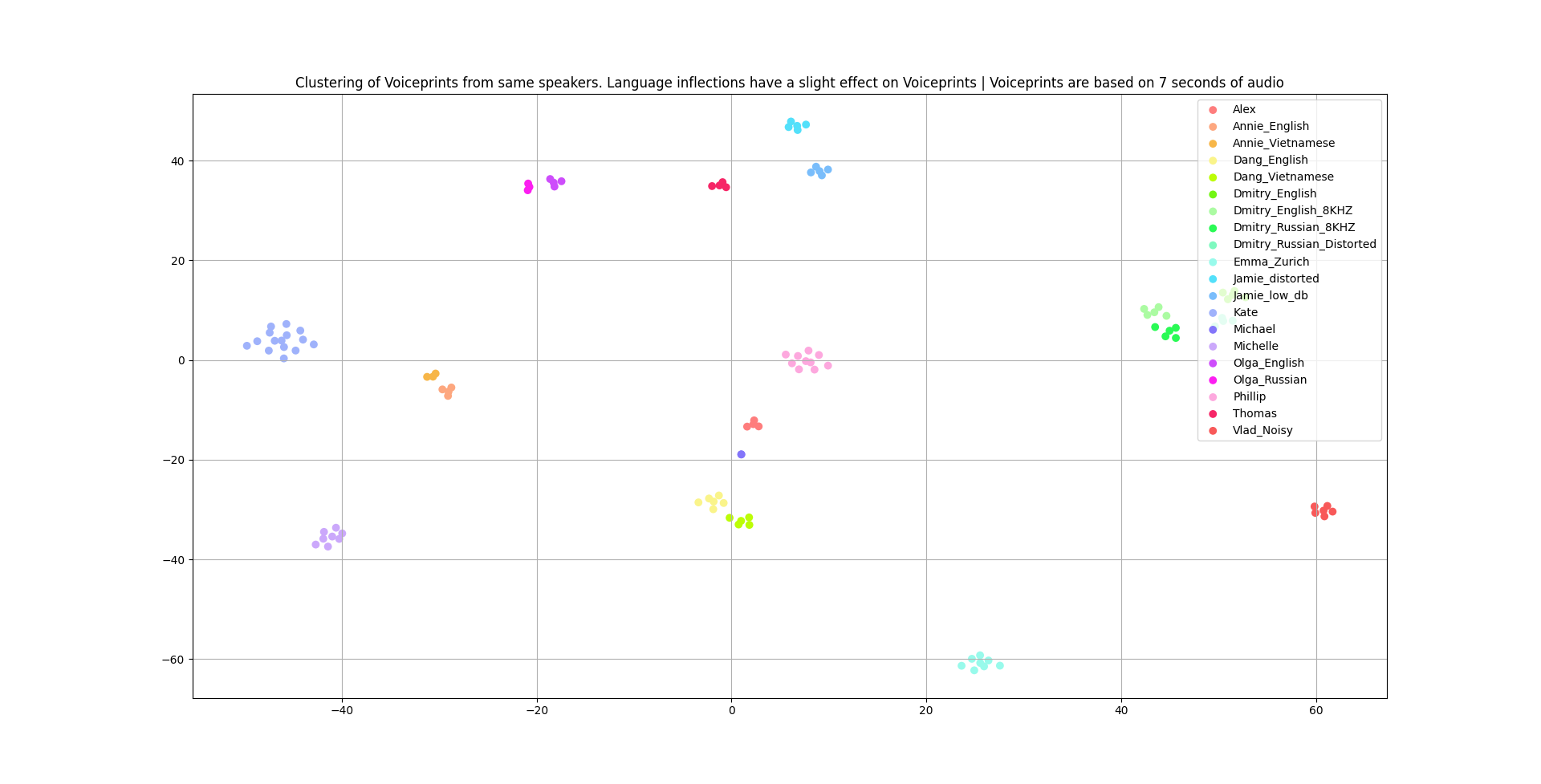

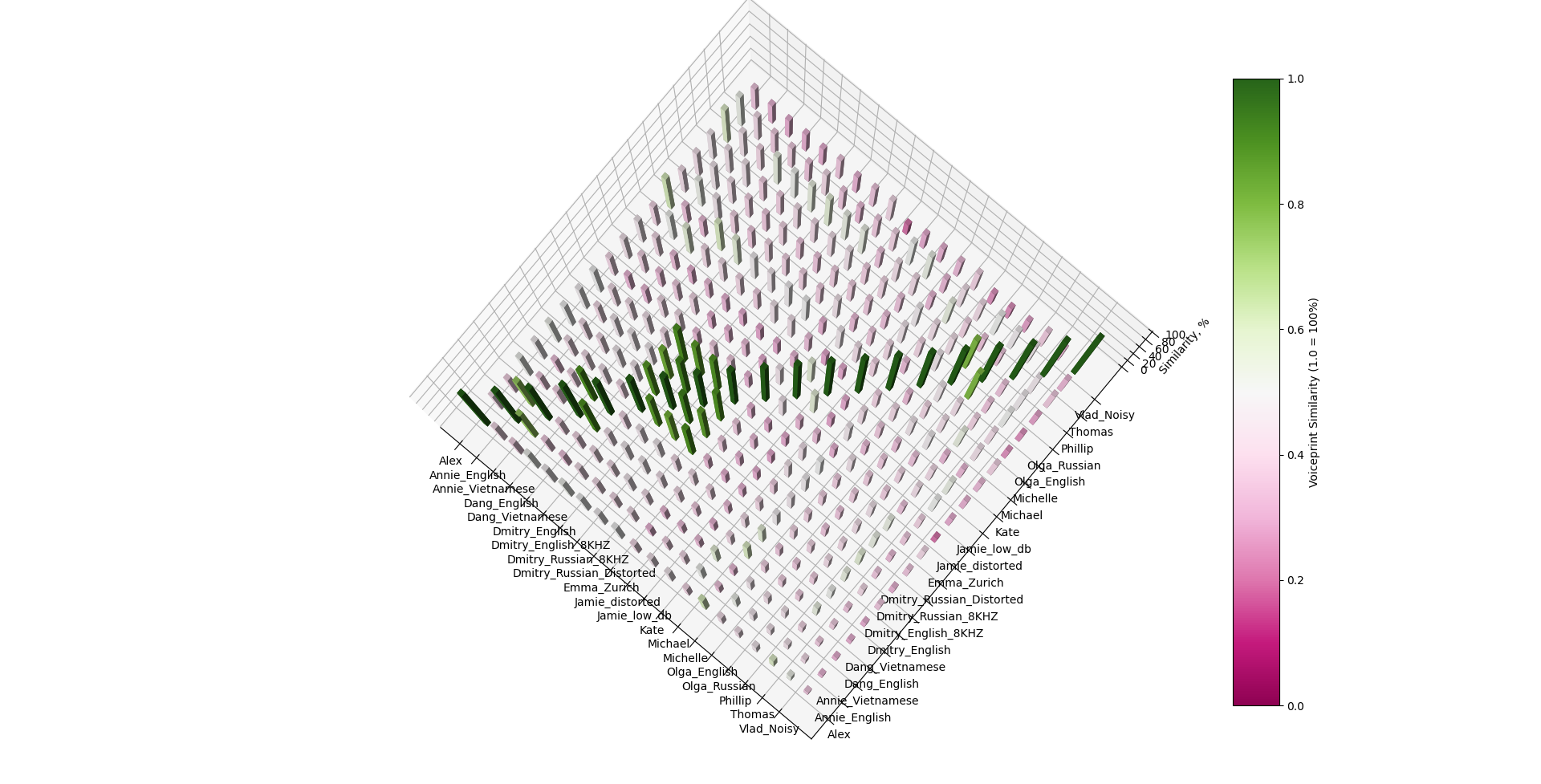

But what should the value of the Authentication Threshold be? Again, to illustrate, I have compared all the Voiceprints used for this write-up and produced another figure that shows the scores (distances) for all combinations of speakers:

Figure 2

Based on this graph, the system confidently groups together the Voiceprints that come from the same speaker (green bars).

Here's another perspective on the same graph that tells us what value of the Authentication Threshold will work well:

If we choose an Authentication Threshold of 60%, we can effectively distinguish all 13 people in this experiment by their Voiceprints, regardless of the noise level and the language they use.

Raising the Authentication Threshold to, for example, 80% makes the Voice Authentication system pickier: some of the speakers who use a different language for enrollment and authentication will not pass the authentication test. Again, Figures 2 and 3 illustrate this.
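The trade-off between 60% and 80% can be reproduced with a few hypothetical scores. The numbers in this sketch are invented (they are not the data behind Figures 2 and 3): same-speaker pairs recorded in different languages score a little lower than same-language pairs, which is enough for a stricter threshold to start rejecting genuine speakers.

```python
# Invented Confidence Scores (%), for illustration only.
GENUINE = {  # pairs of Voiceprints from the same speaker
    "same language": [92, 88, 95, 85, 90],
    "cross language": [78, 72, 81, 75],
}
IMPOSTOR = [12, 25, 40, 33, 55, 18, 47]  # pairs from different speakers


def error_rates(threshold: float) -> tuple[float, float]:
    """Return (false-positive rate, false-negative rate) at the given threshold (%)."""
    genuine_all = [s for scores in GENUINE.values() for s in scores]
    false_accepts = sum(s >= threshold for s in IMPOSTOR)    # impostors let in
    false_rejects = sum(s < threshold for s in genuine_all)  # genuine speakers turned away
    return false_accepts / len(IMPOSTOR), false_rejects / len(genuine_all)


for threshold in (60, 70, 80):
    fpr, fnr = error_rates(threshold)
    rejected = [s for s in GENUINE["cross language"] if s < threshold]
    print(f"{threshold}%: FPR={fpr:.2f} FNR={fnr:.2f} cross-language rejects={rejected}")
```

With these numbers, 60% and 70% make no errors, while 80% rejects three of the four cross-language pairs (a False-Negative rate of 0.33).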

Accuracy

Take-aways:

- Training set: 30M utterances from 15K multilingual speakers

- Validation set: 13K utterances from 500 multilingual speakers

- EER (Equal Error Rate): 3.9% (see explanation below)

- Accuracy: 96.1%

- Enrollment: at least 20 seconds of speech required

- Verification: at least 7 seconds of speech required
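Judging by the numbers above, the Accuracy figure is the complement of the EER:

$$
\text{Accuracy} = 100\% - \text{EER} = 100\% - 3.9\% = 96.1\%
$$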

The Omni Authentication system employs Machine Learning techniques to generate Voiceprints. This means that the system is trained to generate Voiceprints with the useful properties described in the Voiceprinting section. The purpose of the training process is simple in principle: you feed voice samples to the system, and the system is supposed to say yes if the voice samples belong to the owner and no if they do not. Whenever the system makes a mistake, it is adjusted to correct the mistake, and the process repeats until all voice samples are exhausted.

In this process, the Voice Biometrics system can make a mistake either by wrongly saying yes (False-Positive scenario) or by wrongly saying no (False-Negative scenario). We measure accuracy at the point where the rates of False-Positives and False-Negatives over the whole set of voice samples are roughly equal. The error rate at that point is called the Equal Error Rate (EER), and the accuracy is 100% minus the EER.
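The EER can be found by sweeping the threshold and looking for the point where the two error rates meet. This is a simplified sketch with invented scores; evaluation toolkits usually interpolate between thresholds (for example along a ROC curve) rather than picking the closest observed score, as done here.

```python
def equal_error_rate(genuine: list[float], impostor: list[float]) -> tuple[float, float]:
    """Return (threshold, EER): where FPR and FNR are closest, and their mean there."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        fpr = sum(s >= t for s in impostor) / len(impostor)  # impostors wrongly accepted
        fnr = sum(s < t for s in genuine) / len(genuine)     # genuine callers wrongly rejected
        gap = abs(fpr - fnr)
        if best is None or gap < best[0]:
            best = (gap, t, (fpr + fnr) / 2)
    _, threshold, eer = best
    return threshold, eer


# Invented Confidence Scores (%) for same-speaker and different-speaker pairs.
genuine = [91, 85, 77, 88, 64, 95, 82, 70, 59, 90]
impostor = [20, 35, 62, 41, 15, 55, 72, 30, 48, 26]
threshold, eer = equal_error_rate(genuine, impostor)
print(f"threshold={threshold}, EER={eer:.0%}, accuracy={1 - eer:.0%}")
```

For these scores the two error rates meet at a threshold of 64, giving an EER of 10% and therefore an accuracy of 90%.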

But what happens if the system is given a voice sample that it has not seen before? This is where Machine Learning is very useful. When trained properly, the system can take the "knowledge" it has obtained from training and apply it to voice samples that are "new" to it: an amazing ability called generalization, which we ourselves use every day. When we evaluate a Voice Biometrics system, we also use a number of voice samples that are not included in the training set. We refer to them as the validation set. Omni Authentication has been validated on data from 500 speakers, about 13K utterances in total.
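The post does not say exactly how the validation set was put together. A common safeguard, assumed here, is to split the data by speaker so that no validation speaker is ever heard during training; otherwise the validation score would partly measure memorization rather than generalization. A minimal sketch with a made-up corpus (`split_by_speaker` is a hypothetical helper):

```python
import random

Utterance = tuple[str, str]  # (speaker_id, audio file name)


def split_by_speaker(utterances: list[Utterance], n_val_speakers: int,
                     seed: int = 0) -> tuple[list[Utterance], list[Utterance]]:
    """Split utterances so that no speaker appears in both sets."""
    speakers = sorted({speaker for speaker, _ in utterances})
    val_speakers = set(random.Random(seed).sample(speakers, n_val_speakers))
    train = [u for u in utterances if u[0] not in val_speakers]
    val = [u for u in utterances if u[0] in val_speakers]
    return train, val


# Hypothetical toy corpus: 10 speakers with 3 utterances each.
corpus = [(f"spk{i}", f"utt{i}_{j}.wav") for i in range(10) for j in range(3)]
train, val = split_by_speaker(corpus, n_val_speakers=2)
print(len(train), len(val))  # 24 6
```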

Omni Authentication has been trained on approx. 30M utterances from around 15K speakers in multiple languages. Its EER, the error rate at the point where False-Positives and False-Negatives are equally frequent, has been measured at just under 4%.

As I've demonstrated in a simple experiment with 13 participants, the quality of the generated Voiceprints does depend on a number of things:

- Quality of provided audio (e.g. sampling rate)

- Presence of background noises / distortions

- Language spoken

... to name a few...

Furthermore, accuracy varies with the duration of the voice sample: the shorter the sample, the lower the accuracy. In addition, if more than one speaker is present in the voice sample, the system will not work.
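The minimum durations from the take-aways (20 seconds for enrollment, 7 for verification) translate into a simple pre-check before a recording is submitted. This sketch uses Python's standard `wave` module and only measures the total length of the recording; it assumes WAV input and does not strip silence or detect a second speaker, both of which a real system would need to handle. The helper names are hypothetical.

```python
import io
import wave

# Minimum amounts of audio, taken from the take-aways above.
MIN_SECONDS = {"enrollment": 20.0, "verification": 7.0}


def recording_seconds(wav_file) -> float:
    """Total duration of a WAV recording: number of frames / frames per second."""
    with wave.open(wav_file, "rb") as w:
        return w.getnframes() / w.getframerate()


def long_enough(wav_file, purpose: str) -> bool:
    """True if the recording meets the minimum for 'enrollment' or 'verification'."""
    return recording_seconds(wav_file) >= MIN_SECONDS[purpose]


def placeholder_wav(seconds: float, rate: int = 8000) -> io.BytesIO:
    """Build an in-memory 16-bit mono WAV (silence), just to exercise the checks."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes = 16-bit samples
        w.setframerate(rate)
        w.writeframes(b"\x00\x00" * int(seconds * rate))
    buf.seek(0)
    return buf


print(long_enough(placeholder_wav(8.0), "verification"))  # True
print(long_enough(placeholder_wav(8.0), "enrollment"))    # False
```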

Some Conclusions

- Higher values of the Authentication Threshold decrease the rate of False-Positives but increase the rate of False-Negatives, making the system more secure but more likely to reject genuine callers

- Higher values of the Authentication Threshold may introduce language dependency

- Omni Authentication is highly precise when run on smaller groups of people

- Generally speaking, Omni Authentication is not designed for looking up customers by their voice alone. However, it can still be used for customer identification with 90%+ accuracy, i.e. on average it will find 9 out of 10 people by their voice
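To see why looking customers up by voice (identification) is harder than checking a claimed identity (verification), consider a 1:N search: the caller's Voiceprint is compared against every enrolled Voiceprint, so each extra customer is one more chance of a wrong best match. The sketch below assumes cosine similarity and a hypothetical similarity threshold of 0.7; `identify` and the enrolled voiceprints are invented for illustration.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two voiceprint vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def identify(probe: list[float], enrolled: dict[str, list[float]],
             threshold: float = 0.7) -> Optional[str]:
    """Return the enrolled customer whose Voiceprint best matches the probe, or None."""
    best_id, best_score = None, -math.inf
    for customer_id, voiceprint in enrolled.items():
        score = cosine_similarity(probe, voiceprint)
        if score > best_score:
            best_id, best_score = customer_id, score
    # Even the best match must clear the threshold, otherwise nobody is identified.
    return best_id if best_score >= threshold else None


# Hypothetical enrolled customers with toy 4-number voiceprints.
enrolled = {
    "alice": [0.9, 0.1, 0.3, 0.2],
    "bob": [0.1, 0.9, 0.2, 0.7],
    "carol": [0.4, 0.4, 0.8, 0.1],
}
print(identify([0.8, 0.2, 0.35, 0.15], enrolled))    # alice
print(identify([-0.5, -0.5, -0.5, -0.5], enrolled))  # None
```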